Brain-computer interface

Introduction

A brain-computer interface (BCI) is a technological system of communication based on neural activity generated by the brain [1]. It consists of four main parts: a means of acquiring neural signals from the brain, a method for isolating the desired features in those signals, an algorithm to decode the signals obtained, and a method for transforming the decoded output into an action (Figure 1) [2] [3]. This form of communication is independent of the normal output pathways of peripheral nerves and muscles, and the signals can be acquired with invasive or non-invasive techniques [1]. The technology can provide a means of communication for people disabled by neurological diseases or injuries, giving them a new output channel for the brain, and it can also enhance functions in healthy individuals [1] [2] [3]. BCIs are also called brain-machine interfaces (BMIs) [4].

The central nervous system (CNS) responds to stimuli in the environment or in the body by producing an appropriate output, which can be neuromuscular or hormonal. A BCI provides the CNS with a new output that differs from these typical neuromuscular and hormonal ones. It converts electrophysiological signals that merely reflect CNS activity (such as an electroencephalographic, or EEG, rhythm or a neuronal firing rate) into the intended products of that activity: messages and commands that act on the world and accomplish the person’s intent [5]. Because it measures CNS activity and converts it into an artificial output, a BCI can replace, restore, or enhance the natural CNS output, thereby changing the interactions between the CNS and its internal or external environment [3].

The electrical signals produced by brain activity can be detected on the scalp, on the cortical surface, or within the brain. As mentioned previously, a BCI translates these electrical signals into outputs that allow the user to communicate without peripheral nerves and muscles. Because it does not depend on neuromuscular control, a BCI can offer another means of communication to people with disorders such as amyotrophic lateral sclerosis (ALS), brainstem stroke, cerebral palsy, and spinal cord injury [4]. A BCI also depends on feedback and on the adaptation of brain activity in response to that feedback. According to McFarland and Wolpaw (2011), “communication and control applications are interactive processes that require the user to observe the results of their efforts in order to maintain good performance and to correct mistakes [4].” The BCI system must therefore provide feedback and interact with the adaptations the brain makes in response. General BCI operation thus depends on the interaction between the user’s brain, whose signals the BCI measures, and the BCI itself, which translates those signals into specific commands [5]. One of the most difficult challenges in BCI research is managing the complex interactions between the concurrent adaptations of the CNS and the BCI [3].

Although the main objective of BCI research and development is to create assistive communication and control technology for people disabled by various conditions, BCIs also have potential as a new kind of interface to computers or machines for people with normal neurological function. Such interfaces could serve the general population in areas such as gaming, or in high-stress settings such as air traffic control. Other systems could enhance or supplement human performance, for example in image analysis, or expand media access and artistic expression. Some research has also explored another application of BCI technology: assisting the rehabilitation of people disabled by stroke and other acute events [2] [3].

The biology of BCIs

Because a BCI has both a biological and a technological component, the system can work only if the biological side offers specific characteristics that can be exploited; in other words, the technology works because of the way our brains function [6]. The human brain, arguably the most complex signal-processing machine in existence, can transduce a variety of environmental signals and extract information from them to produce behavior, cognition, and action [2] [3]. The brain contains a myriad of neurons, individual nerve cells connected to one another by dendrites and axons. The brain’s actions are carried out by small electric signals generated by differences in electric potential carried by ions across the membranes of the neurons. Even though the signal pathways are insulated by myelin, some residual electric signal escapes, and it can be detected, interpreted, and used, as in the case of BCIs. The same principle allows the development of technologies that send signals into specific regions of the brain: by connecting to the brain a camera that sends the same signals as the eye (or close enough), a blind person could regain some measure of vision [6].

Non-invasive recording of the brain’s electrical activity with electrodes on the scalp has been possible since the 1920s, owing to the work of Hans Berger. His observations demonstrated that the electroencephalogram (EEG) could be used as “an index of the gross state of the brain.” Besides electrical signals, neural activity can also be monitored by measuring magnetic fields or hemoglobin oxygenation with sensors on the scalp, on the surface of the brain, or within the brain [4].

Dependent and independent BCIs

Commands that the user sends to the external world through a BCI do not follow the normal output pathways of peripheral nerves and muscles; instead, the BCI gives the user an alternative way of acting on the world. BCIs can be divided into two classes, dependent and independent [5]. Both terms appeared in 2002 and describe BCIs that use brain signals to control applications; the difference lies in whether they depend on natural CNS output [3].

A dependent BCI uses brain signals that depend on muscle activity [3]. Consider a BCI that presents the user with a matrix of letters that flash one at a time; the user selects a specific letter by looking directly at it. The flashes elicit visual evoked potentials (VEPs) that are recorded from the scalp, and the VEP produced when the intended letter flashes is larger than those produced when other letters flash. In this example, the brain’s output channel is the EEG, but generating the detected signal depends on the direction of gaze, which in turn depends on the extraocular muscles and the cranial nerves that activate them [5].

An independent BCI, in contrast, does not depend on natural CNS output: no muscle activity is needed to generate the brain signals, since the message is not carried by peripheral nerves and muscles [3] [5]. This is a greater advantage for people who are severely disabled by neuromuscular disorders. For example, an independent BCI might present the user with a matrix of letters that flash one at a time, and the user would select a specific letter by producing a P300 evoked potential when the chosen letter flashed. According to McFarland and Wolpaw (2011), “the P300 is a positive potential occurring around 300 msec after an event that is significant to the subject. It is considered a ‘cognitive’ potential since it is generated in tasks when subjects attend and discriminate stimuli. (…) The fact that the P300 potential reflects attention rather than simply gaze direction implied that this BCI did not depend on muscle (i.e., eye-movement) control. Thus, it represented a significant advance [4].” In this case the brain’s output channel is again the EEG, but generating the EEG signal depends on the user’s intent rather than on the precise orientation of the eyes. This kind of BCI is of greater theoretical interest because it provides the brain with new output pathways. It is also probably more useful for people with the most severe neuromuscular disabilities, who lack all normal output channels [5].
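The averaging idea behind a P300 speller can be sketched in a few lines. The Python example below is a simulation under stated assumptions, not the method of any particular system: the letters, sampling rate, noise level, P300 shape (a Gaussian bump peaking at 300 ms) and the 250-450 ms scoring window are all hypothetical choices. Each letter's post-flash epochs are averaged so that background EEG noise shrinks while the time-locked P300 survives, and the letter with the largest average amplitude in the window is selected.

```python
import numpy as np

fs = 250.0                          # sampling rate in Hz (assumed)
t = np.arange(int(0.6 * fs)) / fs   # 0-600 ms after each flash
letters = "ABCDEF"                  # hypothetical item set
target = "C"                        # letter the simulated user attends to
n_repeats = 15                      # flashes per letter (assumed)
rng = np.random.default_rng(1)


def simulated_epoch(attended):
    """One post-flash EEG epoch in uV: noise, plus a P300-like bump if the letter is attended."""
    epoch = rng.normal(0.0, 5.0, t.size)
    if attended:
        epoch += 5.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2))
    return epoch


def p300_score(epochs):
    """Average the epochs, then take the mean amplitude 250-450 ms after the flash."""
    average = np.mean(epochs, axis=0)
    window = (t >= 0.25) & (t <= 0.45)
    return average[window].mean()


scores = {}
for letter in letters:
    epochs = [simulated_epoch(letter == target) for _ in range(n_repeats)]
    scores[letter] = p300_score(epochs)

chosen = max(scores, key=scores.get)   # letter whose averaged response looks most like a P300
print(chosen, {k: round(float(v), 2) for k, v in scores.items()})
```

Averaging 15 epochs reduces uncorrelated noise by roughly a factor of the square root of 15, which is why practical spellers repeat each flash several times before committing to a selection.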

Another term has been used more recently: hybrid BCI. According to He et al. (2013), it can refer to a BCI that employs two different types of brain signals, such as VEPs and sensorimotor rhythms, to produce its outputs, or to a system that combines a BCI output with a natural muscle-based output [3].

Invasive and non-invasive BCIs

BCIs can also be classified into two classes according to how the neural signals are collected. When the signals are recorded with implanted electrode arrays, the system is called invasive. Invasive systems are common in experiments with rodents and nonhuman primates and are well suited to decoding activity in the cerebral cortex. They provide measurements with a high signal-to-noise ratio (SNR) and also allow the spiking activity of small populations of neurons to be decoded [2]. Their downside is that they cause considerable discomfort and risk to the user [1]. Noninvasive systems such as EEG, in turn, acquire the signal without surgical implantation. The ongoing challenge with noninvasive techniques is their low SNR, although some developments in EEG have substantially increased its SNR [2].

Brief overview of the development of Brain-Computer Interfaces

For a long time, there was speculation that a device such as the electroencephalograph, which records electrical potentials generated by brain activity, could be used to control devices with the signals it obtains [1]. The first demonstrations of BCI technology came in the 1960s. In 1964, Grey Walter used a signal recorded from the scalp by EEG to control a slide projector. Eberhard Fetz also advanced BCI development by teaching monkeys to control a meter needle by changing the firing rate of a single cortical neuron. In the 1970s, Jacques Vidal developed a system that used the scalp-recorded visual evoked potential over the visual cortex to determine eye-gaze direction, and thus the direction in which the user wanted to move a computer cursor. The term brain-computer interface can be traced to Vidal [4]. In 1980, Elbert and colleagues demonstrated that people could learn to control slow cortical potentials (SCPs) in scalp-recorded EEG activity and use them to adjust the vertical position of a rocket image moving on a TV screen. In 1988, Farwell and Donchin showed that people could use the P300 event-related potential to spell words on a computer screen. Another major development came when Wolpaw and colleagues trained people to control the amplitude of the mu and beta rhythms (sensorimotor rhythms) in the EEG recorded over the sensorimotor cortex, demonstrating that users could use these rhythms to move a computer cursor in one or two dimensions [3].

BCI research grew rapidly from the mid-1990s and has continued to grow since. Over the past 20 years, it has covered a broad range of areas relevant to BCI development, such as basic and applied neuroscience, biomedical engineering, materials engineering, electrical engineering, signal processing, computer science, assistive technology, and clinical rehabilitation [3].

Brain-Computer Interface components

To produce an output that reflects the user’s intent, a BCI has to detect and measure features of brain signals. It has an input (for example, the user’s electrophysiological activity), components that translate input into output, an output (a device command), and an operating protocol. The protocol determines the onset and offset of operation, how its timing is controlled, how the feature translation process is parameterized, the nature of the commands the BCI produces, and how errors in translation are handled [3] [5]. The BCI system can be divided into four basic components: signal acquisition, feature extraction, feature translation, and device output commands [3].
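The four-component structure can be pictured as a simple processing loop. The sketch below is illustrative only: `run_bci` and the stand-in stages (`fake_acquire`, `mean_power`, `threshold`) are hypothetical names, and a real system would add the timing and error-handling rules that its protocol defines.

```python
from typing import Callable, Iterable, List, Sequence


def run_bci(acquire: Callable[[], Iterable[Sequence[float]]],
            extract: Callable[[Sequence[float]], float],
            translate: Callable[[float], str],
            act: Callable[[str], None]) -> List[str]:
    """Chain the four components and return the commands that were issued."""
    issued = []
    for block in acquire():           # 1. signal acquisition: digitized blocks of samples
        feature = extract(block)      # 2. feature extraction: compact value per block
        command = translate(feature)  # 3. feature translation: feature -> device command
        act(command)                  # 4. device output; its visible result is the user's feedback
        issued.append(command)
    return issued


def fake_acquire():
    """Stand-in for amplified, digitized EEG blocks (hypothetical values)."""
    yield [1.0, -1.0, 1.0, -1.0]
    yield [4.0, -4.0, 4.0, -4.0]
    yield [0.5, -0.5, 0.5, -0.5]


def mean_power(block):
    """Hypothetical feature: mean squared amplitude of the block."""
    return sum(v * v for v in block) / len(block)


def threshold(feature):
    """Hypothetical translation rule: issue 'select' when the feature is high."""
    return "select" if feature > 2.0 else "wait"


device_log = []
commands = run_bci(fake_acquire, mean_power, threshold, device_log.append)
print(commands)
```

Passing each stage in as a function keeps the loop independent of the signal type, so the same skeleton could in principle wrap EEG, ECoG, or spike-based stages.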

The first component, signal acquisition, measures the brain signals, and adequate acquisition is essential to the function of any BCI. Its objective is to detect the voluntary neural activity generated by the user, whether by invasive or noninvasive means. To achieve this, some kind of sensor is used, such as scalp electrodes for electrophysiological activity or functional magnetic resonance imaging (fMRI) for hemodynamic activity. The component amplifies the acquired signals for subsequent processing and may also filter them to remove noise such as power line interference at 50 or 60 Hz. The amplified signals are then digitized and sent to a computer [3].
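As an illustration of the filtering step, the sketch below removes mains interference from a digitized signal with a second-order IIR notch filter. The coefficient formulas follow the widely used biquad notch from Robert Bristow-Johnson's Audio EQ Cookbook; the sampling rate, quality factor `q`, and the synthetic 10 Hz plus 50 Hz test signal are assumptions, and real acquisition hardware may filter differently (for example, in analog circuitry before digitization).

```python
import numpy as np


def notch_coefficients(f0, fs, q=30.0):
    """Biquad notch centred on f0 Hz (RBJ cookbook form), normalised so a[0] == 1."""
    w0 = 2.0 * np.pi * f0 / fs
    alpha = np.sin(w0) / (2.0 * q)
    b = np.array([1.0, -2.0 * np.cos(w0), 1.0])
    a = np.array([1.0 + alpha, -2.0 * np.cos(w0), 1.0 - alpha])
    return b / a[0], a / a[0]


def biquad_filter(b, a, x):
    """Run a second-order IIR filter over x sample by sample (direct form I)."""
    y = np.zeros(len(x))
    x1 = x2 = y1 = y2 = 0.0
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def amplitude_at(x, fs, f):
    """Amplitude of the sinusoid at f Hz (f must fall exactly on an FFT bin)."""
    spectrum = np.fft.rfft(x)
    k = int(round(f * len(x) / fs))
    return 2.0 * np.abs(spectrum[k]) / len(x)


# Hypothetical test signal: a 10 Hz rhythm (10 uV) plus 50 Hz mains hum (40 uV).
fs = 250.0                                   # sampling rate in Hz (assumed)
t = np.arange(int(8 * fs)) / fs
raw = 10.0 * np.sin(2 * np.pi * 10 * t) + 40.0 * np.sin(2 * np.pi * 50 * t)

b, a = notch_coefficients(50.0, fs)          # use 60.0 where the mains frequency is 60 Hz
clean = biquad_filter(b, a, raw)

settled = slice(int(2 * fs), None)           # skip the filter's start-up transient
print(amplitude_at(raw[settled], fs, 50), amplitude_at(clean[settled], fs, 50))
print(amplitude_at(clean[settled], fs, 10))
```

A high `q` gives a narrow notch, so nearby EEG frequencies pass almost unchanged; the trade-off is a longer start-up transient, which is why the first two seconds are ignored in the check.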

The next component, feature extraction, analyzes the digitized signals to isolate signal features: specific characteristics such as the power in particular EEG frequency bands or the firing rates of individual cortical neurons. Feature extraction procedures include spatial filtering, voltage amplitude measurements, spectral analyses, and single-neuron separation [1] [3] [5]. The extracted features are expressed in a compact form suited to translation into output commands, and to be effective they must correlate strongly with the user’s intent. Artifacts such as the electromyogram (EMG) from cranial muscles must be avoided or eliminated to ensure accurate measurement of the desired features [3].
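Two of the procedures named above, spatial filtering and spectral analysis, can be sketched with NumPy. The example assumes a common average reference (CAR) as the spatial filter and mean periodogram power in the 8-12 Hz mu band as the feature; the three-channel montage, amplitudes, and noise are hypothetical.

```python
import numpy as np


def common_average_reference(eeg):
    """Spatial filter: subtract the mean across channels at every sample (eeg is channels x samples)."""
    return eeg - eeg.mean(axis=0, keepdims=True)


def band_power(x, fs, low, high):
    """Mean Hann-windowed periodogram power of a 1-D signal between low and high Hz."""
    window = np.hanning(len(x))
    spectrum = np.fft.rfft((x - x.mean()) * window)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(window ** 2))
    in_band = (freqs >= low) & (freqs <= high)
    return psd[in_band].mean()


# Hypothetical 3-channel recording: a large slow artifact shared by every electrode,
# a 10 Hz mu rhythm at channel 0 only, and independent sensor noise.
fs = 250.0
t = np.arange(int(2 * fs)) / fs
rng = np.random.default_rng(0)
common = 50.0 * np.sin(2 * np.pi * 1.0 * t)
mu = 8.0 * np.sin(2 * np.pi * 10.0 * t)
eeg = np.vstack([common + mu, common, common]) + rng.normal(0.0, 1.0, (3, t.size))

car = common_average_reference(eeg)
features = [band_power(ch, fs, 8.0, 12.0) for ch in car]   # one mu-band feature per channel
print([round(float(f), 3) for f in features])
```

CAR removes anything identical on all electrodes, but it also spreads a fraction of channel 0's mu rhythm (here one third, with opposite sign) into the other channels, which is one reason the choice of spatial filter matters.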

Once extracted, the features are passed to the feature translation algorithm, which converts them into commands for the output device that accomplish the user’s intent. The translation algorithm should adapt to spontaneous or learned changes in the user’s signal features. This is important “in order to ensure that the user’s possible range of feature values covers the full range of device control and also to make control as effective and efficient as possible [3].” Translation algorithms include linear equations, nonlinear methods such as neural networks, and other classification techniques. Whatever their nature, these algorithms convert independent variables (the signal features) into dependent variables (the device control commands) [5] [7].
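A linear translation algorithm that adapts to changes in the user's features might look like the following. This is a hypothetical sketch, not a published algorithm: the class name, the gain, and the exponential-forgetting `rate` are assumptions. The command is a linear function of the feature, and the offset and scale are re-estimated from recent feature values so that the user's feature range keeps spanning the range of device control.

```python
import math


class AdaptiveLinearTranslator:
    """Hypothetical sketch: map one feature to one command with a linear equation
    whose offset and scale track the user's recent feature values."""

    def __init__(self, gain=1.0, rate=0.05, mean=0.0, var=1.0):
        self.gain = gain    # command units per standard deviation of the feature (assumed)
        self.rate = rate    # forgetting factor of the running statistics, 0 <= rate < 1
        self.mean = mean    # current estimate of the feature's typical value
        self.var = var      # current estimate of the feature's variance

    def translate(self, feature):
        # Linear translation: independent variable (feature) -> dependent variable (command).
        command = self.gain * (feature - self.mean) / math.sqrt(self.var)
        # Exponentially weighted update of mean and variance, so the mapping follows
        # spontaneous or learned drifts in the feature.
        delta = feature - self.mean
        self.mean += self.rate * delta
        self.var = (1.0 - self.rate) * (self.var + self.rate * delta * delta)
        return command


translator = AdaptiveLinearTranslator(gain=1.0, rate=0.05, mean=10.0, var=1.0)
features = [19.0, 21.0] * 200        # hypothetical: the feature baseline has drifted from 10 to 20
commands = [translator.translate(f) for f in features]
print(round(translator.mean, 2), [round(c, 2) for c in commands[-4:]])
```

With `rate=0` the statistics would stay fixed at the initial mean of 10, so every command in this demo would be strongly positive; with adaptation, the commands re-center around zero after the drift.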

Finally, the commands produced by the feature translation algorithm form the output of the BCI. They are sent to the application and produce a result such as selecting a letter, controlling a cursor, operating a robotic arm, moving a wheelchair, or any number of other outcomes. The operation of the device provides feedback to the user, closing the control loop [3].

BCI signals

As mentioned above, brain signals acquired by different methods can serve as BCI inputs. These signals differ substantially in topographical resolution, frequency content, area of origin, and technical requirements. Their resolution, for example, ranges from the EEG, with centimeter resolution, through the electrocorticogram (ECoG), with millimeter resolution, to neuronal action potentials, with tens-of-microns resolution. The main question when choosing signals for a BCI is which ones best indicate the user’s intent [3] [5].

The use of sensorimotor rhythms for cursor control was first reported by Wolpaw et al. (1991). These EEG rhythms vary with movement or imagined movement and are spontaneous, requiring no specific stimuli to occur [3] [5]. The P300, by contrast, is an endogenous event-related potential component of the EEG [3]: a positive potential that occurs around 300 msec after an event that is significant to the user. BCIs based on the P300 do not depend on muscle control such as eye movement, since the potential reflects attention rather than simply gaze direction. Both sensorimotor rhythms and the P300 have demonstrated that brain signals acquired noninvasively can be used for communication and device control [4].
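One common way to quantify how a sensorimotor rhythm changes with movement or imagined movement is the event-related desynchronization (ERD) percentage, ERD% = (A - R) / R × 100, where R is band power in a reference (rest) interval and A is band power during the task; negative values indicate a power decrease. The sketch below assumes an 8-12 Hz mu band, a 250 Hz sampling rate, and synthetic signals in which imagery halves the mu amplitude, so the expected result is roughly a 75% decrease.

```python
import numpy as np


def mu_power(x, fs, low=8.0, high=12.0):
    """Mean periodogram power of x in the mu band (default 8-12 Hz, assumed)."""
    spectrum = np.fft.rfft(x - x.mean())
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= low) & (freqs <= high)
    return np.mean(np.abs(spectrum[band]) ** 2) / len(x)


def erd_percent(rest, task, fs):
    """Relative band-power change (A - R) / R * 100; negative values mean desynchronization."""
    r = mu_power(rest, fs)
    a = mu_power(task, fs)
    return (a - r) / r * 100.0


# Hypothetical 2 s segments from one electrode over the sensorimotor cortex.
fs = 250.0
t = np.arange(int(2 * fs)) / fs
rng = np.random.default_rng(2)
rest = 10.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0.0, 1.0, t.size)
imagery = 5.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0.0, 1.0, t.size)

erd = erd_percent(rest, imagery, fs)
print(round(float(erd), 1))
```

Because power scales with the square of amplitude, halving the rhythm's amplitude gives about a 75% power decrease; in real recordings the rest and task segments would come from repeated trials rather than a single pair.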

Other BCI signals have also been explored, such as single-neuron activity recorded by microelectrodes implanted in the cortex. This work has been carried out mainly in nonhuman primates, though also in humans. Another group of studies demonstrated that electrocorticographic (ECoG) activity recorded from the surface of the brain is also a viable signal source for a BCI. Both lines of research support the viability of invasive methods for gathering useful BCI signals, although questions remain about their suitability and reliability for long-term use in humans [3] [4].

Besides electrophysiological measures, other types of signals can be useful: magnetoencephalography (MEG), functional magnetic resonance imaging (fMRI), and functional near-infrared (fNIR) systems. MEG and fMRI recording technology is still expensive and bulky, which makes practical BCI applications unlikely in the near future. fNIR can be cheaper and more compact, but like fMRI it relies on changes in cerebral blood flow, which are slow, and this limits its usefulness in a BCI system. For now, electrophysiological features remain the most practical signals for BCI technology [4].

Invasive and noninvasive techniques for acquiring signals

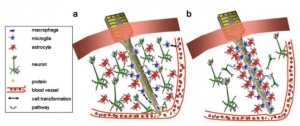

Invasive signal acquisition mainly relies on electrophysiological recording from electrodes that are neurosurgically implanted within the brain or over its surface. The preferred implantation site has been the motor cortex, because it is accessible and its large pyramidal cells produce measurable signals that can be generated by actual or imagined movements [3]. The advantage of invasive techniques is their high spatial and temporal resolution, since individual neurons can be recorded at very high sampling rates. Intracranially recorded signals can carry more information and allow quicker responses, which may in turn reduce the training and attention required of the user compared with noninvasive methods.

However, invasive methods raise several issues. First, the signal must remain stable and reliable over the days and years during which the person is expected to use the implanted device, and the user must be able to generate the control signal consistently without frequent retuning [1] [3]. Second, signal quality must be maintained over long periods: the brain tissue around the implant reacts to electrode insertion (figure 2), with both damage to the local tissue and irritation at the electrode-tissue interface [3]. Third, if the device includes a neuroprosthesis that requires a stimulus to activate the disabled limb, the additional stimulus could significantly affect the neural circuits and interfere with the signal of interest, so the BCI system must accurately detect and remove such artifacts [1] [3].
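For the stimulation artifacts mentioned above, one simple approach (assumed here for illustration; it is neither the only nor necessarily the best method) is blanking: when the stimulation times are known, samples in a short window around each pulse are discarded and replaced by linear interpolation between the neighboring clean samples. The window lengths, sampling rate, and synthetic signal below are hypothetical.

```python
import numpy as np


def blank_stimulus_artifacts(x, stim_onsets, fs, pre_ms=1.0, post_ms=5.0):
    """Replace samples from pre_ms before to post_ms after each known stimulation onset
    with a straight line between the nearest clean samples on either side."""
    y = np.array(x, dtype=float)                 # work on a copy
    bad = np.zeros(len(y), dtype=bool)
    pre = int(round(pre_ms * fs / 1000.0))
    post = int(round(post_ms * fs / 1000.0))
    for onset in stim_onsets:
        bad[max(onset - pre, 0):min(onset + post + 1, len(y))] = True
    good_idx = np.flatnonzero(~bad)
    y[bad] = np.interp(np.flatnonzero(bad), good_idx, y[good_idx])
    return y, bad


# Hypothetical recording: a 20 Hz, 10 uV neural oscillation sampled at 2 kHz,
# with a 500 uV, 2 ms stimulation artifact every 50 ms.
fs = 2000.0
t = np.arange(int(fs)) / fs
clean = 10.0 * np.sin(2 * np.pi * 20.0 * t)
stim_onsets = np.arange(50, len(t), 100)
raw = clean.copy()
for onset in stim_onsets:
    raw[onset:onset + 4] += 500.0

cleaned, bad = blank_stimulus_artifacts(raw, stim_onsets, fs)
print(int(bad.sum()), float(np.max(np.abs(raw - clean))), float(np.max(np.abs(cleaned - clean))))
```

Blanking also discards any genuine neural activity inside each window, so the windows should be kept as short as the artifact allows.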

Success with invasive techniques in humans has been limited, although relatively few experiments have been performed with human subjects. To make invasive methods more suitable, further advances in microelectrodes are needed to obtain stable long-term recordings. Widespread use of invasive techniques in humans would also require more research to reduce the number of cells that must be recorded simultaneously to obtain a useful signal, and to provide feedback to the nervous system through electrical stimulation via the electrodes [1].

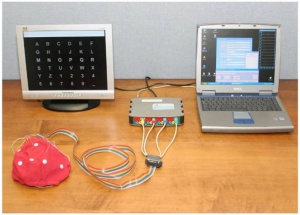

In contrast to invasive techniques, noninvasive methods reduce the risk to users because neither surgery nor permanent attachment to the device is required [3]. Several noninvasive techniques have been used to image the brain or measure its activity, including computerized tomography (CT), positron emission tomography (PET), single-photon emission computed tomography (SPECT), magnetic resonance imaging (MRI), functional magnetic resonance imaging (fMRI), magnetoencephalography (MEG), and electroencephalography (EEG) [1]. EEG is the most prevalent signal acquisition method for BCIs; its high temporal resolution captures changes in brain activity that occur within a few msec. Although its spatial resolution does not match that of implanted methods, signals from up to 256 electrode sites can be measured simultaneously [1] [3]. EEG is practical both in a laboratory setup (figure 3) and in real-world settings: it is portable and inexpensive, and it has a vast literature of past performance [3].

Applications

A number of disorders disrupt the neuromuscular pathways through which the brain communicates with and controls its external environment. Disorders such as amyotrophic lateral sclerosis (ALS), brainstem stroke, brain or spinal cord injury, cerebral palsy, muscular dystrophies, and multiple sclerosis impair the neural pathways that control muscles or impair the muscles themselves [5].

One option for restoring function to people with motor impairments is to provide the brain with a non-muscular communication and control channel. A BCI can convey messages and commands to the external world in this way, and the potential of such systems to help people with disabilities is clear [1] [5]. He et al. (2013) note that “a BCI output could replace natural output that has been lost to injury or disease. Thus, someone who cannot speak could use a BCI to spell words that are then spoken by a speech synthesizer. Or one who has lost limb control could use a BCI to operate a powered wheelchair [3].”

A BCI output could also enhance natural CNS output, for example by preventing lapses of attention during a task that requires constant focus: a BCI could detect the brain activity that precedes a lapse in attention and produce an output (such as a sound) that alerts the person. It could also supplement natural CNS output; for example, a person could use a BCI to control a third (robotic) arm, or, while moving a cursor by hand, use a BCI to select the items the cursor points to. In these cases, the BCI supplements the natural neuromuscular output with an artificial one. Finally, a BCI output could improve natural CNS output. For example, a person whose arm movements are impaired by stroke damage to the sensorimotor cortex could use a BCI to measure signals from the damaged areas and then stimulate muscles or control an orthosis that improves arm movement [3].

See Also

Neurable - Building BCI for VR and AR

Neuralink - Elon Musk's company developing implantable brain-computer interfaces

References

- [1] Vallabhaneni, A., Wang, T. and He, B. (2005). Brain-Computer Interface. Neural Engineering, Springer US, pp. 85-121.

- [2] Sajda, P., Müller, K.-R. and Shenoy, K. V. (2008). Brain-Computer Interfaces. IEEE Signal Processing Magazine, 25(1): 16-17.

- [3] He, B., Gao, S., Yuan, H. and Wolpaw, J. R. (2013). Brain-Computer Interfaces. Neural Engineering, Springer US, pp. 87-151.

- [4] McFarland, D. J. and Wolpaw, J. R. (2011). Brain-Computer Interfaces for Communication and Control. Commun ACM, 54(5): 60-66.

- [5] Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G. and Vaughan, T. M. (2002). Brain-Computer Interfaces for Communication and Control. Clinical Neurophysiology, 113: 767-791.

- [6] Grabianowski, E. How Brain-Computer Interfaces Work. Retrieved from computer.howstuffworks.com/brain-computer-interface.htm

- [7] Wolpaw, J. R., Birbaumer, N., Heetderks, W. J., McFarland, D. J., Peckham, P. H., Schalk, G., Donchin, E., Quatrano, L. A., Robinson, C. J. and Vaughan, T. M. (2000). Brain-Computer Interface Technology: A Review of the First International Meeting. IEEE Transactions on Rehabilitation Engineering, 8(2): 164-173.